What Is Data Scraping And How Can You Use It?

Data Scraping

The only special information you need is the FEC ID for the candidate of interest. One of the inconveniences of an API is that we don't get to specify how the data we receive is formatted. This is a small price to pay considering all the other benefits APIs provide.

Contents

Data of the same category are typically encoded into similar pages by a common script or template. In data mining, a program that detects such templates in a particular information source, extracts its content and translates it into a relational form, is called a wrapper. Wrapper generation algorithms assume that the input pages of a wrapper induction system conform to a common template and that they can be easily identified in terms of a common URL scheme.
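A minimal sketch of the wrapper idea described above, under stated assumptions: the two pages, their URLs, and the `name`/`price` class names are invented for illustration, and the template is hard-coded here rather than induced automatically. It uses Python's standard-library `html.parser` to turn templated pages into relational rows.

```python
from html.parser import HTMLParser

# Two hypothetical pages generated from the same template (made-up data).
PAGES = {
    "https://example.com/product/1":
        '<div class="item"><h2 class="name">Kettle</h2><span class="price">24.99</span></div>',
    "https://example.com/product/2":
        '<div class="item"><h2 class="name">Toaster</h2><span class="price">39.50</span></div>',
}


class TemplateWrapper(HTMLParser):
    """Collects the text of elements whose class attribute names a known field."""

    FIELDS = {"name", "price"}  # assumed field markers in the template

    def __init__(self):
        super().__init__()
        self.record = {}
        self._current = None

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if css_class in self.FIELDS:
            self._current = css_class

    def handle_data(self, data):
        # Store the first text node that follows a recognised field tag.
        if self._current:
            self.record[self._current] = data.strip()
            self._current = None


def wrap(pages):
    """Translate each templated page into one (url, name, price) row."""
    rows = []
    for url, html in pages.items():
        parser = TemplateWrapper()
        parser.feed(html)
        rows.append((url, parser.record.get("name"), parser.record.get("price")))
    return rows


rows = wrap(PAGES)
for row in rows:
    print(row)
```

A real wrapper induction system would learn the field locations from sample pages; this sketch only shows the extraction-to-rows step.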

Screen Scraping

To illustrate, I will focus on the BLS employment statistics webpage, which contains multiple HTML tables from which we can scrape data. However, if we are concerned only with specific content on the webpage, then we need to make our HTML node selection process a little more targeted. To do this, we can use our browser's developer tools to examine the webpage we are scraping and get more details on specific nodes of interest. If you are using Chrome or Firefox you can open the developer tools by pressing F12 (Cmd + Opt + I on Mac); in Safari you would use Command-Option-I.
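To make the idea of targeted node selection concrete, here is a small Python sketch using only the standard library. The HTML snippet, the table `id` values, and the figures are made up; in practice you would read the `id` of the table you care about from the browser's developer tools. The sketch does not handle nested tables.

```python
from html.parser import HTMLParser

# Made-up page with two tables; only the second one is of interest.
HTML = """
<table id="nav"><tr><td>Home</td><td>About</td></tr></table>
<table id="employment">
  <tr><th>Month</th><th>Jobs</th></tr>
  <tr><td>Jan</td><td>100</td></tr>
  <tr><td>Feb</td><td>120</td></tr>
</table>
"""


class TableById(HTMLParser):
    """Collects the cell text of the single table whose id matches table_id."""

    def __init__(self, table_id):
        super().__init__()
        self.table_id = table_id
        self.in_table = False
        self.in_cell = False
        self.rows = []

    def handle_starttag(self, tag, attrs):
        if tag == "table" and dict(attrs).get("id") == self.table_id:
            self.in_table = True
        elif self.in_table and tag == "tr":
            self.rows.append([])
        elif self.in_table and tag in ("td", "th"):
            self.in_cell = True
            self.rows[-1].append("")

    def handle_endtag(self, tag):
        if tag == "table":
            self.in_table = False
        elif tag in ("td", "th"):
            self.in_cell = False

    def handle_data(self, data):
        # Only keep text that sits inside a cell of the targeted table.
        if self.in_table and self.in_cell:
            self.rows[-1][-1] += data.strip()


parser = TableById("employment")
parser.feed(HTML)
table_rows = parser.rows
print(table_rows)
```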

Web Scraping

We used the urllib and requests libraries to send GET requests to predefined URLs. The json library puts the text data into a Python dictionary, where you can reference various sections of the JSON by name.

Normally, a local file could be an Excel file, a Word file, or a file from any other Microsoft Office application. In the Properties panel of the Excel Application Scope activity, in the WorkbookPath field, type "web_scraping.xlsx". Upon project execution, a file with this name is created in the project folder to store data from the scraping. Alternatively, you can specify a file that already exists on your machine.

That means that just because you can log in to the page through your browser, you won't necessarily be able to scrape it with your Python script. The website you're scraping in this tutorial serves static HTML content. In this scenario, the server that hosts the site sends back HTML documents that already contain all the data you'll get to see as a user. You'll notice that changes in the search box of the site are directly reflected in the URL's query parameters and vice versa. If you change either of them, then you'll see different results on the website.

The data we pulled comes from a REST API in a "snapshot in time" format. So, to build a history over time, we needed to run our scraper at fixed time intervals to pull data from the API and then write it to the database.

To effectively harvest that data, you'll need to become skilled at web scraping. The Python libraries requests and Beautiful Soup are powerful tools for the job. If you like to learn with hands-on examples and you have a basic understanding of Python and HTML, then this tutorial is for you. Data scraping allows the user to extract only the information that the user needs.
Suppose the day trader wants to access the data from the website every day. The Wikipedia search lists 20 results per page and, for our example, we want to extract the first three pages of search results. Basically, a cron job allowed us to execute a shell script at fixed time intervals, and we invoked our Python scraper from within that shell script. Each time the day trader presses the button, it should automatically pull the market data into Excel. Now the Excel macro is ready to perform the scraping functions. You can also apply any other familiar Python string methods to further clean up your text. When you add the two highlighted lines of code, you're creating a Beautiful Soup object that takes the HTML content you scraped earlier as its input. When you instantiate the object, you also instruct Beautiful Soup to use the appropriate parser. As mentioned before, what happens in the browser is not related to what happens in your script.
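The snapshot-and-store pattern mentioned above (a scheduled scraper that writes each pull to a database) can be sketched roughly as follows. This is an illustration under assumptions, not the original setup: `fetch_snapshot` is a hypothetical placeholder returning made-up values instead of calling a real API, and an in-memory SQLite database stands in for whatever database is actually used.

```python
import sqlite3
from datetime import datetime, timezone


def fetch_snapshot():
    """Hypothetical placeholder for the real API call; returns made-up values."""
    return {"station": "A", "available": 5}


def store_snapshot(conn, snapshot, taken_at):
    """Append one timestamped snapshot so that a history builds up over time."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS snapshots "
        "(taken_at TEXT, station TEXT, available INTEGER)"
    )
    conn.execute(
        "INSERT INTO snapshots VALUES (?, ?, ?)",
        (taken_at, snapshot["station"], snapshot["available"]),
    )
    conn.commit()


# In-memory database for illustration; a scheduled job would use a persistent one.
conn = sqlite3.connect(":memory:")

# Each scheduled run would perform exactly one of these iterations.
for _ in range(2):
    store_snapshot(conn, fetch_snapshot(), datetime.now(timezone.utc).isoformat())

count = conn.execute("SELECT COUNT(*) FROM snapshots").fetchone()[0]
print(count)
```

With cron, the loop disappears: the scheduler invokes the script at each interval and the script stores a single snapshot per run.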

First, you extract time series from the data and then subset them to a point where both companies were in business and sufficient review activity is generated. If there are very large gaps in the data for several months on end, then conclusions drawn from the data are less reliable. For each of the data fields you write one extraction function using the tags you observed.

The approach and tools you need to gather data using APIs are outside the scope of this tutorial. When you use an API, the process is generally more stable than gathering the data through web scraping. That's because APIs are made to be consumed by programs, rather than by human eyes. If the design of a website changes, it doesn't mean that the structure of the API has changed. Web scraping is the process of gathering information from the Internet.

Anything related to automation, data collection, data analysis, data mining, reporting, and any data-related project is our specialty. We have helped numerous companies cut operational costs and save time through our automation optimization services. Our comprehensive reporting tools give our clients the competitive edge for data-driven strategy and execution. We deliver on what we say with 24/7 customer support to keep your company's data needs running smoothly and on time.

A good starting point for further analysis is to look at how the month-by-month performance by rating was for each company.

Malicious scrapers, on the other hand, crawl the website regardless of what the site operator has allowed. Since all scraping bots have the same purpose, to access site data, it can be difficult to distinguish between legitimate and malicious bots. Web scraping is also used for illegal purposes, including the undercutting of prices and the theft of copyrighted content.
An online entity targeted by a scraper can suffer severe financial losses, especially if it's a business strongly relying on competitive pricing models or deals in content distribution.
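One way a well-behaved scraper can respect what the site operator has allowed is to consult the site's robots.txt before fetching a page. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content, the URLs, and the `example-bot` user agent are invented for illustration, and the rules are parsed from a string instead of being downloaded.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content; a real scraper would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url, user_agent="example-bot"):
    """Return True if the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


public_ok = allowed("https://example.com/public/page.html")
private_ok = allowed("https://example.com/private/data.html")
print(public_ok, private_ok)
```

Checking robots.txt is a courtesy convention and not an access control; a site's terms of use may impose further restrictions.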

The next step shows how the information can be extracted from Internet Explorer using VBA. Now the Excel file is ready to interact with Internet Explorer. The next step would be to add macro scripts that facilitate data scraping in HTML. There are certain prerequisites that have to be performed on the Excel macro file before getting into the process of data scraping in Excel. Data scraping becomes simple when working on a research-based project on a daily basis, and such a project is purely dependent on the internet and websites.

An additional option, recommended by Hadley Wickham, is to use selectorgadget.com, a Chrome extension, to help identify the web page elements you need. At this point we may believe we have all the text desired and proceed with joining the paragraph (p_text) and list (ul_text or li_text) character strings and then perform the desired textual analysis. However, we may now have captured more text than we were hoping for. For example, by scraping all lists we are also capturing the listed links in the left margin of the webpage.

However, it's important to first cover one of the basic components of HTML elements, as we will leverage this information to pull desired data. I offer only enough insight required to begin scraping; I highly recommend XML and Web Technologies for Data Sciences with R and Automated Data Collection with R to learn more about HTML and XML element structures.

These examples provide the basics required for downloading most tabular and Excel files from online. However, this is just the beginning of importing/scraping data from the web.

Your browser will diligently execute the JavaScript code it receives back from a server and create the DOM and HTML for you locally. However, making a request to a dynamic website in your Python script will not give you the rendered HTML page content.
That means you'll need an account to be able to see (and scrape) anything from the page. The process to make an HTTP request from your Python script is different from how you access a page from your browser.

If we look at the list items that we scraped, we'll see that these texts correspond to the left margin text. A vast amount of information exists across the interminable webpages that exist online. Much of this information is "unstructured" text that may be useful in our analyses. This section covers the basics of scraping these texts from online sources. Throughout this section I will illustrate how to extract different text components of webpages by dissecting the Wikipedia page on web scraping.

In fact, we can provide our first example of importing online tabular data by downloading the Data.gov .csv file that lists all the federal agencies that supply data to Data.gov. Beautiful Soup is packed with useful functionality to parse HTML data.
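As a rough illustration of importing hosted tabular data, the following Python sketch parses CSV text with the standard `csv` module. The download step is deliberately left out and the sample rows and column names are made up; they are not the contents of the Data.gov file mentioned above.

```python
import csv
import io

# Made-up sample standing in for the text of a downloaded .csv file.
CSV_TEXT = "agency,datasets\nDepartment of Example,12\nOffice of Samples,7\n"


def read_rows(text):
    """Parse CSV text into a list of dictionaries keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


csv_rows = read_rows(CSV_TEXT)
total = sum(int(row["datasets"]) for row in csv_rows)
print(csv_rows[0]["agency"], total)
```

For a real file, the text could be obtained with `urllib.request.urlopen(url).read().decode()` before being passed to `read_rows`.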

- An example would be to find and copy names and phone numbers, or companies and their URLs, to a list (contact scraping).

- Newer forms of web scraping involve listening to data feeds from web servers.

- Web scrapers typically take something out of a page, to make use of it for another purpose somewhere else.

- However, most web pages are designed for human end-users and not for ease of automated use.

- Web pages are built using text-based mark-up languages (HTML and XHTML), and frequently contain a wealth of useful data in text form.

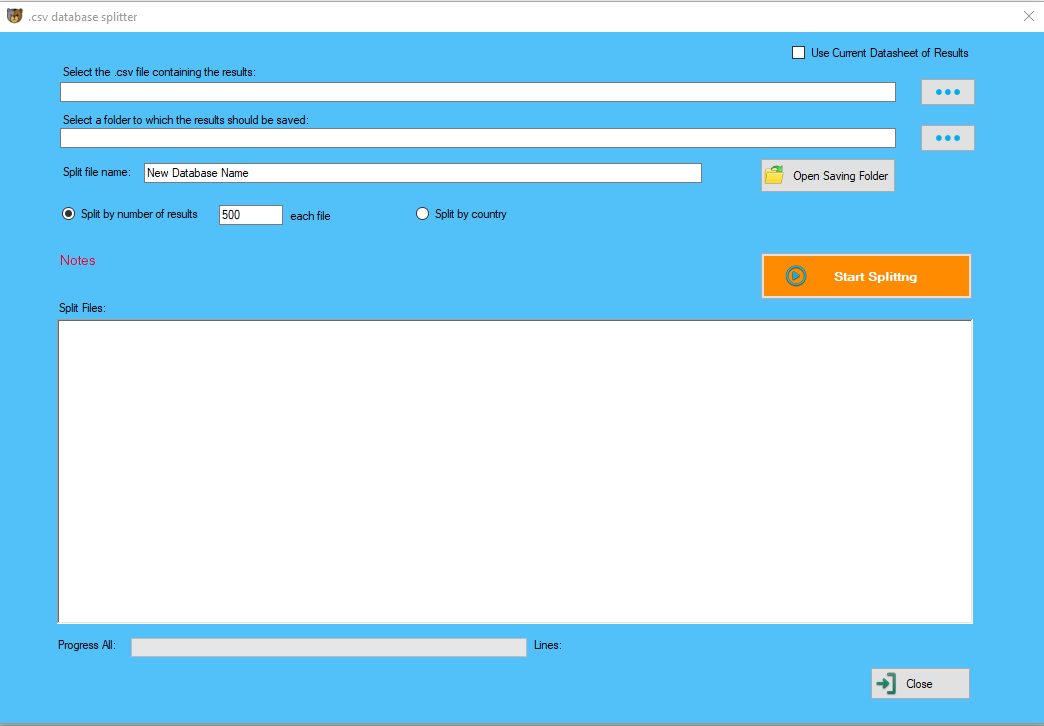

Downloading Excel spreadsheets hosted online can be performed just as easily. Recall that there is not a base R function for importing Excel data; however, several packages exist to handle this capability.

From small one-time projects to high-volume daily, weekly, or monthly data feeds, we have the solution and experience to deliver. Let the expert data scraping team build, maintain, and host your data scraping project.

Data scraping is the technique that helps in the extraction of desired information from an HTML web page to a local file on your machine. Even copy-pasting the lyrics of your favorite song is a form of web scraping! However, the words "web scraping" usually refer to a process that involves automation. Some websites don't like it when automated scrapers gather their data, while others don't mind.

However, if you intend to use data scraping regularly in your work, you may find a dedicated data scraping tool more effective. Setting up a dynamic web query in Microsoft Excel is an easy, versatile data scraping method that lets you set up a data feed from an external website (or multiple websites) into a spreadsheet. Let's go through how to set up a simple data scraping action using Excel. Data scraping has a vast number of applications: it's useful in just about any case where data needs to be moved from one place to another. But none are simple and versatile enough to tackle every web scraping or crawling task.

It retrieves the HTML data that the server sends back and stores that data in a Python object. Some website providers offer Application Programming Interfaces (APIs) that allow you to access their data in a predefined manner. With APIs, you can avoid parsing HTML and instead access the data directly using formats like JSON and XML.
The incredible amount of data on the Internet is a rich resource for any field of research or personal interest. It's a trusted and helpful companion for your web scraping adventures. Its documentation is comprehensive and relatively user-friendly to get started with. You'll find that Beautiful Soup will cater to most of your parsing needs, from navigating to advanced searching through the results. By now, you've successfully harnessed the power and user-friendly design of Python's requests library. With only a few lines of code, you managed to scrape the static HTML content from the web and make it available for further processing.

This is where the magic happens, often neglected by most web scrapers. In cases where a web page is loaded in one go this may not be of much interest to you, as you will want to scrape the text or data right off the HTML page anyway. However, in many cases modern webpages use web service calls or AJAX calls. Selenium is an elaborate solution designed for simulating multiple different browsers ranging from IE to Chrome.
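When a page gets its data from a web service or AJAX call, the response is often JSON, which can be decoded directly instead of parsing HTML. A minimal sketch, assuming a made-up response body with hypothetical `results` and `title` fields (a real API defines its own structure):

```python
import json

# Made-up response body; a real API defines its own field names.
RESPONSE_BODY = (
    '{"total": 2, "results": ['
    '{"id": 1, "title": "Python Developer"}, '
    '{"id": 2, "title": "Data Engineer"}]}'
)


def titles_from_response(body):
    """Decode the JSON text and pull one named field out of each result."""
    payload = json.loads(body)
    return [item["title"] for item in payload["results"]]


titles = titles_from_response(RESPONSE_BODY)
print(titles)
```

The decoded payload is an ordinary Python dictionary, so each section can be referenced by name without any HTML parsing.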

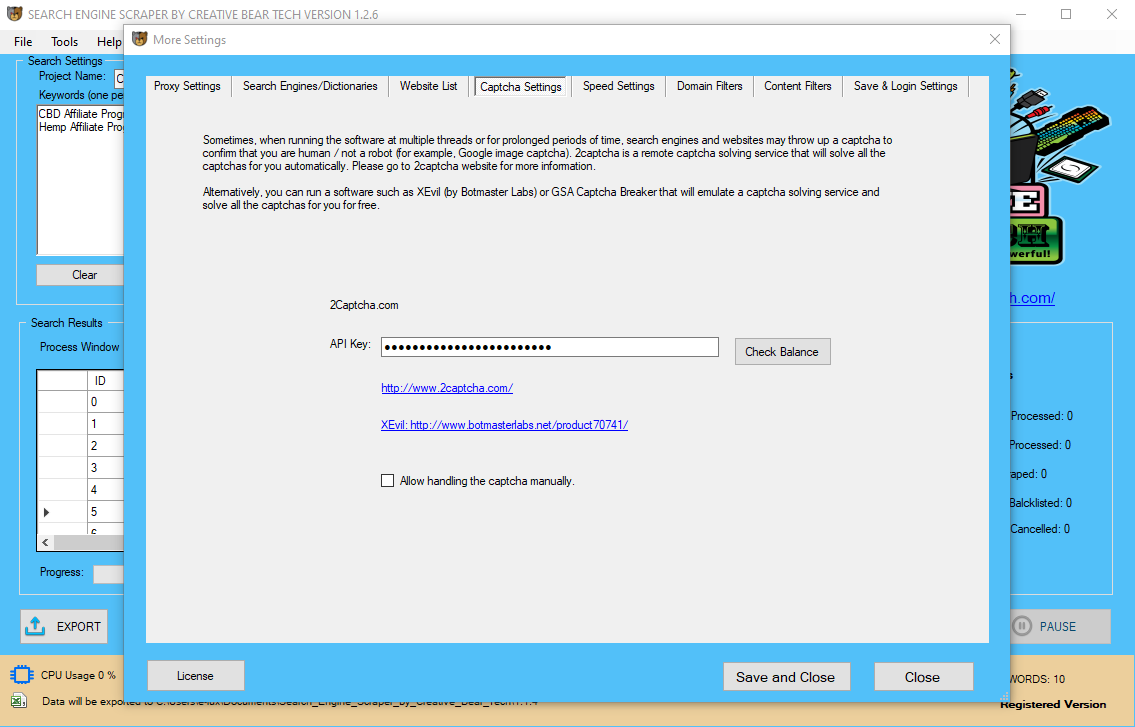

If you are faced with a more complicated example than just a single HTML table, then Import.io may be the tool for you. Want to scrape all the product items of an e-commerce website? Legitimate bots abide by a site's robots.txt file, which lists those pages a bot is permitted to access and those it cannot. Once we understand the received data format we can usually re-format it using a little list subsetting and for looping. The key information you will be concerned with is contained in the series identifier. Honestly, the options are plentiful; Wikipedia has a comprehensive list of all the GUI testing tools out there.

You will find many blogs out there telling you that you can EASILY earn real money on the web; most are scams, while others won't let you earn impressive money. Web scraping is an honest way of making actual money in a repeatable manner by selling scraped data, making online Internet analyses or simply taking freelance web-scraping jobs.

IE Developer Tools: When you open the IE Developer Tools window you will often leverage the click element feature (the cursor icon in the upper left corner) to locate an HTML element on an HTML page. This is one of the most frequently used features; however, as a web scraper you must also learn to use the Network tab (it has a similar name in Chrome).

Each component of this series code has meaning and can be adjusted to get specific Mass Layoff data. The BLS provides this breakdown for what each component means, along with the available list of codes for this data set. For instance, the S00 (MLUMS00NN ) component represents the division/state. S00 will pull for all states, but I could change to D30 to pull data for the Midwest or S39 to pull for Ohio.

We used the 'mysql.connector' and 'sqlalchemy' libraries in Python to push data into MySQL hosted as part of RDS. Once we have our connection object, we simply invoke the .to_sql() function of a pandas DataFrame to write directly to the database.
We needed a machine, ideally Linux-based, that could run Python scripts efficiently. As is the case with most data scraping tasks, a small box would do the job just fine. Here, we will be scraping data from a BBC article that was recently posted. Another common structure of data storage on the Web is in the form of HTML tables. This section reiterates some of the information from the previous section; however, we focus solely on scraping data from HTML tables. The simplest approach to scraping HTML table data directly into R is by using either the rvest package or the XML package. Data scraping allows you to extract structured data from your browser, application or document to a database, .csv file or even Excel spreadsheet.

In the United States District Court for the Eastern District of Virginia, the court ruled that the terms of use should be brought to the users' attention in order for a browse wrap contract or license to be enforced. QVC's complaint alleges that the defendant disguised its web crawler to mask its source IP address and thus prevented QVC from quickly repairing the problem. This is a particularly interesting scraping case because QVC is seeking damages for the unavailability of their website, which QVC claims was caused by Resultly.

Many websites have large collections of pages generated dynamically from an underlying structured source like a database. One package that works smoothly with pulling Excel data from URLs is gdata. With gdata we can use read.xls() to download this Fair Market Rents for Section 8 Housing Excel file from the given URL. The most basic form of getting data from online is to import tabular (i.e. .txt, .csv) or Excel files that are being hosted online. This is often not considered web scraping; however, I think it's a good place to start introducing the user to interacting with the web for obtaining data.
Importing tabular data is especially common for the many types of government data available online. Next, we'll begin exploring the more conventional forms of scraping text and data stored in HTML webpages. The filtered results will only show links to job opportunities that include python in their title. You can use the same square-bracket notation to extract other HTML attributes as well. It was designed both for web scraping and building test scenarios for web developers. Selenium is available in many programming environments: C#, Java, Python. I personally prefer Python as there is not that much need for object-oriented programming when building most web scrapers.

To further illustrate the topic, let us take the example of a day trader who runs an Excel macro for pulling market data from a finance website into an Excel sheet using VBA. Add an Excel Application Scope activity under the Data Scraping sequence. In the Variables panel, change the scope of the automatically generated ExtractDataTable variable to Sequence. Do this to make the variable available outside of its current scope, the Data Scraping sequence.

The blsAPI allows users to request data for one or multiple series through the U.S. Bureau of Labor Statistics API. To use the blsAPI package you only need information on the data; no key or OAuth is required. I illustrate by pulling Mass Layoff Statistics data, but other data sets and their series codes are available as well. Most pre-built API packages already have this connection established, but when using httr you'll need to specify it. Moreover, some semi-structured data query languages, such as XQuery and HTQL, can be used to parse HTML pages and to retrieve and transform page content. Try finding a list of useful contacts on Twitter, and import the data using data scraping.
This will give you a taste of how the process can fit into your everyday work. Getting to grips with using dynamic web queries in Excel is a useful way to gain an understanding of data scraping. A common use case is to fetch the URL of a link, as you did above. Run the above code snippet and you'll see the text content displayed. Since you're now working with Python strings, you can .strip() the superfluous whitespace. At this point a little trial-and-error is needed to get the exact data you want. Sometimes you will find that additional items are tagged, so you have to reduce the output manually.

In this case each tweet is saved as an individual list item and a full range of information is provided for each tweet (i.e. id, text, user, geo location, favorite count, etc.). The N0001 (MLUMS00NN ) component represents the industry/demographics. N0001 pulls data for all industries, but I could change to N0008 to pull data for the food industry or C00A2 for all persons age 30-44.

When you explore URLs, you can get information on how to retrieve data from the website's server. Any job you'll search for on this website will use the same base URL. However, the query parameters will change depending on what you're looking for. You can think of them as query strings that get sent to the database to retrieve specific records. You can see that there's a list of jobs returned on the left side, and there are more detailed descriptions about the selected job on the right side. When you click on any of the jobs on the left, the content on the right changes. You can also see that when you interact with the website, the URL in your browser's address bar also changes. In this tutorial, you'll build a web scraper that fetches Software Developer job listings from the Monster job aggregator website.
Your web scraper will parse the HTML to pick out the relevant pieces of information and filter that content for specific words.

For instance, we can see that the first tweet was by FiveThirtyEight concerning American politics and, at the time of this analysis, has been favorited by 3 people. So if I'm interested in comparing the rise in cost versus the rise in student debt I can simply subset for this data once I've identified its location and naming structure. Note that for this subsetting we use the magrittr package and the sapply function; both are covered in more detail in their relevant sections. This is just meant to illustrate the types of data available through this API. We can use the campaign finance API and functions to gain some insight into Trump's campaign income and expenditures.
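The relationship between a fixed base URL and changing query parameters, described above for the job search site, can be sketched with Python's standard `urllib.parse`. The base URL and the parameter names `q` and `where` are hypothetical placeholders, not the real site's scheme; inspect the address bar of the site you are scraping to find the actual names.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical base URL and parameter names, chosen only for illustration.
BASE_URL = "https://example.com/jobs/search"


def build_search_url(query, location):
    """Keep the base URL fixed and encode the search terms as query parameters."""
    return f"{BASE_URL}?{urlencode({'q': query, 'where': location})}"


url = build_search_url("software developer", "Australia")

# Going the other way: recover the parameters from an existing URL.
params = parse_qs(urlsplit(url).query)
print(url)
print(params)
```

Changing only the arguments to `build_search_url` produces a different result page while the base URL stays the same.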
